Large Language Models (LLMs) have changed how teams approach data processing, analysis, and decision-making. DeepSeek, a family of open-weight LLMs, supports text generation, code completion, and complex problem-solving. Running DeepSeek on Microsoft Azure adds scalable, on-demand compute. This guide covers two routes: self-hosting DeepSeek on Azure compute (a VM or AKS), and deploying it from the Azure Model Catalog.

Prerequisites

Before you begin, ensure you have the following:

- An active Microsoft Azure account

- Azure Virtual Machine (VM) or Azure Kubernetes Service (AKS)

- DeepSeek model files (if using a self-hosted approach)

- Python 3 and pip (the required libraries are installed in Step 3)

Step 1: Choose an Azure Compute Service

DeepSeek requires significant computational power, so selecting the right compute service is crucial. Here are two recommended options:

1. Azure Virtual Machines (VMs)

- Suitable for dedicated instances

- Recommended VM sizes: ND A100 v4 or NCas T4 v3 for GPU inference; the general-purpose Standard_D family has no GPU and suits only small models or CPU-only testing

- Supports NVIDIA GPUs for AI workloads

2. Azure Kubernetes Service (AKS)

- Best for scalable deployments

- Supports distributed computing

- Ideal for hosting DeepSeek as an API endpoint
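To make the sizing decision more concrete, here is a rough back-of-the-envelope sketch in Python. It estimates the memory needed for a model's weights and compares it with the total GPU memory of a few example VM sizes. The 20% overhead factor and the helper names are assumptions for illustration, and a model that only fits across several GPUs also needs to be sharded across them. Check the current Azure VM size documentation before provisioning.

```python
"""Rough GPU-memory estimate for serving a model, to help pick a VM size."""

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

# Total GPU memory (GB) for one example size per family.
# Verify these figures against the Azure documentation before relying on them.
VM_GPU_MEMORY_GB = {
    "Standard_NC4as_T4_v3": 16,    # 1 x T4, 16 GB
    "Standard_NC64as_T4_v3": 64,   # 4 x T4, 16 GB each
    "Standard_ND96asr_v4": 320,    # 8 x A100, 40 GB each
}


def weights_gb(params_billions: float, dtype: str = "fp16") -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[dtype]


def fits(params_billions: float, dtype: str = "fp16", overhead: float = 1.2) -> list:
    """List the example VM sizes whose GPU memory covers weights plus overhead.

    `overhead` is an assumed allowance for activations and the KV cache.
    """
    needed = weights_gb(params_billions, dtype) * overhead
    return [vm for vm, gb in VM_GPU_MEMORY_GB.items() if gb >= needed]


if __name__ == "__main__":
    for size in (7, 67):
        print(f"{size}B parameters in fp16: {weights_gb(size):.0f} GB of weights,",
              "candidate sizes:", fits(size))
```

Under these assumptions, a 7B-parameter model in fp16 needs about 14 GB for weights alone, which leaves no headroom on a single 16 GB T4. That is why quantization or a larger size is usually worth considering.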

Step 2: Deploy an Azure Virtual Machine

For a quick setup, you can deploy DeepSeek on an Azure VM:

- Go to the Azure Portal and navigate to Virtual Machines.

- Click Create > Azure Virtual Machine.

- Choose an image (Ubuntu 20.04 LTS is recommended).

- Select a size with GPU capabilities.

- Configure networking to allow SSH (port 22) and the API port used in Step 5 (8000), ideally restricted to your own IP range.

- Review and create the VM.

- Once deployed, connect via SSH:

ssh azureuser@your-vm-ip
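The portal steps above can also be scripted with the Azure CLI. The sketch below only builds and prints the `az` commands so that you can review them before running anything. The resource group name, VM name, default size, and image URN are placeholders chosen for illustration; confirm the image with `az vm image list` and the size against your GPU quota.

```python
import shlex


def az_vm_create_command(
    resource_group: str,
    name: str,
    size: str = "Standard_NC4as_T4_v3",  # assumed GPU size; adjust to your quota
    image: str = "Canonical:0001-com-ubuntu-server-focal:20_04-lts-gen2:latest",
    admin_username: str = "azureuser",
) -> list:
    """Build the argument list for `az vm create` (nothing is executed)."""
    return [
        "az", "vm", "create",
        "--resource-group", resource_group,
        "--name", name,
        "--size", size,
        "--image", image,
        "--admin-username", admin_username,
        "--generate-ssh-keys",
    ]


def az_open_port_command(resource_group: str, name: str, port: int = 8000) -> list:
    """Build the argument list for opening the API port on the VM."""
    return [
        "az", "vm", "open-port",
        "--resource-group", resource_group,
        "--name", name,
        "--port", str(port),
    ]


if __name__ == "__main__":
    # Hypothetical names; replace with your own resource group and VM name.
    print(shlex.join(az_vm_create_command("deepseek-rg", "deepseek-vm")))
    print(shlex.join(az_open_port_command("deepseek-rg", "deepseek-vm")))
```

Printing instead of executing keeps the script free of side effects; paste the output into a shell where you have already run `az login`.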

Step 3: Install Dependencies

After accessing your VM, install the required dependencies:

sudo apt update && sudo apt upgrade -y

sudo apt install python3 python3-pip git

pip3 install torch transformers accelerate

If using CUDA-enabled GPUs, install NVIDIA drivers and CUDA:

sudo apt install nvidia-driver-525

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

Step 4: Download and Run DeepSeek Model
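With the Step 3 dependencies in place, one way to download and run a DeepSeek checkpoint is through the Hugging Face transformers library, independent of the repository scripts that follow. The sketch below is a minimal example under stated assumptions: the checkpoint name `deepseek-ai/deepseek-llm-7b-chat` and the helper names are choices made for illustration, and the first call to `generate` downloads several gigabytes of weights.

```python
MODEL_ID = "deepseek-ai/deepseek-llm-7b-chat"  # assumed checkpoint; swap as needed


def pick_device() -> str:
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


def build_messages(prompt: str) -> list:
    """Wrap a prompt in the chat-message format expected by chat templates."""
    return [{"role": "user", "content": prompt}]


def generate(prompt: str, max_new_tokens: int = 128) -> str:
    """Load the model and return its reply to a single prompt."""
    # Imported lazily so the helpers above work without the heavy libraries.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    dtype = torch.float16 if pick_device() == "cuda" else torch.float32
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=dtype,
        device_map="auto",  # relies on the accelerate package from Step 3
    )

    # Render the chat template to text, then tokenize it.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(text, return_tensors="pt", add_special_tokens=False)
    inputs = inputs.to(model.device)

    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated reply is decoded.
    reply_ids = output[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(reply_ids, skip_special_tokens=True)
```

Calling `pick_device()` first is a quick way to confirm that the driver and CUDA wheels from Step 3 are working before you commit to the download.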

Clone the DeepSeek repository and download the model:

git clone https://github.com/deepseek-ai/deepseek

cd deepseek

python3 download_model.py --model deepseek-llm

Run DeepSeek in inference mode:

python3 deepseek_infer.py --model deepseek-llm --input "Hello, how can I help you?"

Step 5: Deploy DeepSeek as an API

To access DeepSeek remotely, deploy it as a REST API using FastAPI:

pip3 install fastapi uvicorn

Create a new Python script app.py:

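from fastapi import FastAPI
from pydantic import BaseModel

import deepseek

app = FastAPI()

# Load the model once at startup rather than on every request.
model = deepseek.load_model("deepseek-llm")


class GenerateRequest(BaseModel):
    # Shape of the JSON request body: {"prompt": "..."}
    prompt: str


@app.post("/generate/")
def generate_text(request: GenerateRequest):
    # A bare `prompt: str` parameter would be read from the query string,
    # so the JSON body is declared as a Pydantic model instead.
    response = model.generate(request.prompt)
    return {"response": response}

Run the API: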

uvicorn app:app --host 0.0.0.0 --port 8000

Now, DeepSeek is accessible via HTTP requests:

curl -X POST "http://your-vm-ip:8000/generate/" -H "Content-Type: application/json" -d '{"prompt":"Write a short poem."}'

Step 6: Automate and Scale with Azure Kubernetes Service (AKS)

If you need a scalable setup, use AKS:

- Create an AKS Cluster in Azure.

- Build a Docker container for DeepSeek:

docker build -t deepseek-api .

docker run -p 8000:8000 deepseek-api

- Push the image to Azure Container Registry (ACR).

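- Deploy the container as a Kubernetes Deployment with multiple replicas behind a Service.

Running several replicas behind a Service provides load balancing and keeps the API available if a single pod fails.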
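As a rough illustration of the last step, the sketch below generates a Deployment and a LoadBalancer Service as JSON, which `kubectl apply` accepts alongside YAML. The registry name, resource names, replica count, and the single-GPU limit per pod are assumptions for illustration. The `nvidia.com/gpu` limit only works on a GPU node pool with the NVIDIA device plugin available.

```python
import json


def deployment_manifest(image: str, replicas: int = 2, port: int = 8000) -> dict:
    """Deployment that runs `replicas` copies of the API container."""
    labels = {"app": "deepseek-api"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "deepseek-api"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "deepseek-api",
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Assumed: one GPU per pod.
                        "resources": {"limits": {"nvidia.com/gpu": 1}},
                    }]
                },
            },
        },
    }


def service_manifest(port: int = 8000) -> dict:
    """LoadBalancer Service that spreads traffic across the replicas."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "deepseek-api"},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": "deepseek-api"},
            "ports": [{"port": 80, "targetPort": port}],
        },
    }


if __name__ == "__main__":
    # Hypothetical ACR image name; replace with your own registry and tag.
    image = "myregistry.azurecr.io/deepseek-api:latest"
    bundle = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [deployment_manifest(image), service_manifest()],
    }
    print(json.dumps(bundle, indent=2))
```

If the script is saved as, say, `manifests.py`, its output can be piped straight into the cluster with `python3 manifests.py | kubectl apply -f -`.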

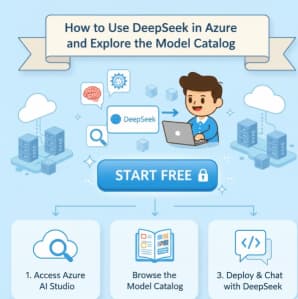

Alternative Approach: Using DeepSeek in the Azure Model Catalog

Azure Model Catalog allows easy management and deployment of AI models, including DeepSeek.

- If you don’t have an Azure subscription, sign up for an Azure account first.

- Search for DeepSeek R1 in the model catalog.

- Open the model card in the model catalog on Azure AI Foundry.

- Click Deploy to obtain the inference API endpoint and key, and to access the playground.

- Within about a minute, you should land on the deployment page, which shows the API endpoint and key. You can try out your prompts in the playground.

- You can use the API and key with various clients.
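As one example of such a client, the sketch below calls the deployment with the `azure-ai-inference` Python package (`pip3 install azure-ai-inference`). The environment variable names, the `DeepSeek-R1` deployment name, and the helper functions are assumptions for illustration; use the endpoint, key, and model name shown on your own deployment page. DeepSeek R1 may return its reasoning inside `<think>` tags, so a small helper separates that from the final answer.

```python
import os
import re


def split_reasoning(text: str) -> tuple:
    """Separate a <think>...</think> block from the final answer.

    Returns (reasoning, answer); reasoning is empty if no block is present.
    """
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        return "", text.strip()
    return match.group(1).strip(), text[match.end():].strip()


def ask(prompt: str) -> str:
    """Send one prompt to the deployed model and return the raw reply text."""
    # Imported lazily so split_reasoning works without the Azure SDK installed.
    from azure.ai.inference import ChatCompletionsClient
    from azure.ai.inference.models import UserMessage
    from azure.core.credentials import AzureKeyCredential

    client = ChatCompletionsClient(
        # Assumed variable names; set them to the values from the deployment page.
        endpoint=os.environ["AZURE_INFERENCE_ENDPOINT"],
        credential=AzureKeyCredential(os.environ["AZURE_INFERENCE_KEY"]),
    )
    response = client.complete(
        messages=[UserMessage(content=prompt)],
        model="DeepSeek-R1",  # assumed deployment name
        max_tokens=1024,
    )
    return response.choices[0].message.content
```

A typical call would be `reasoning, answer = split_reasoning(ask("Write a short poem."))`, which lets you log the reasoning separately and show only the answer to users.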